March 18 Zulip Cloud security incident

Tim Abbott

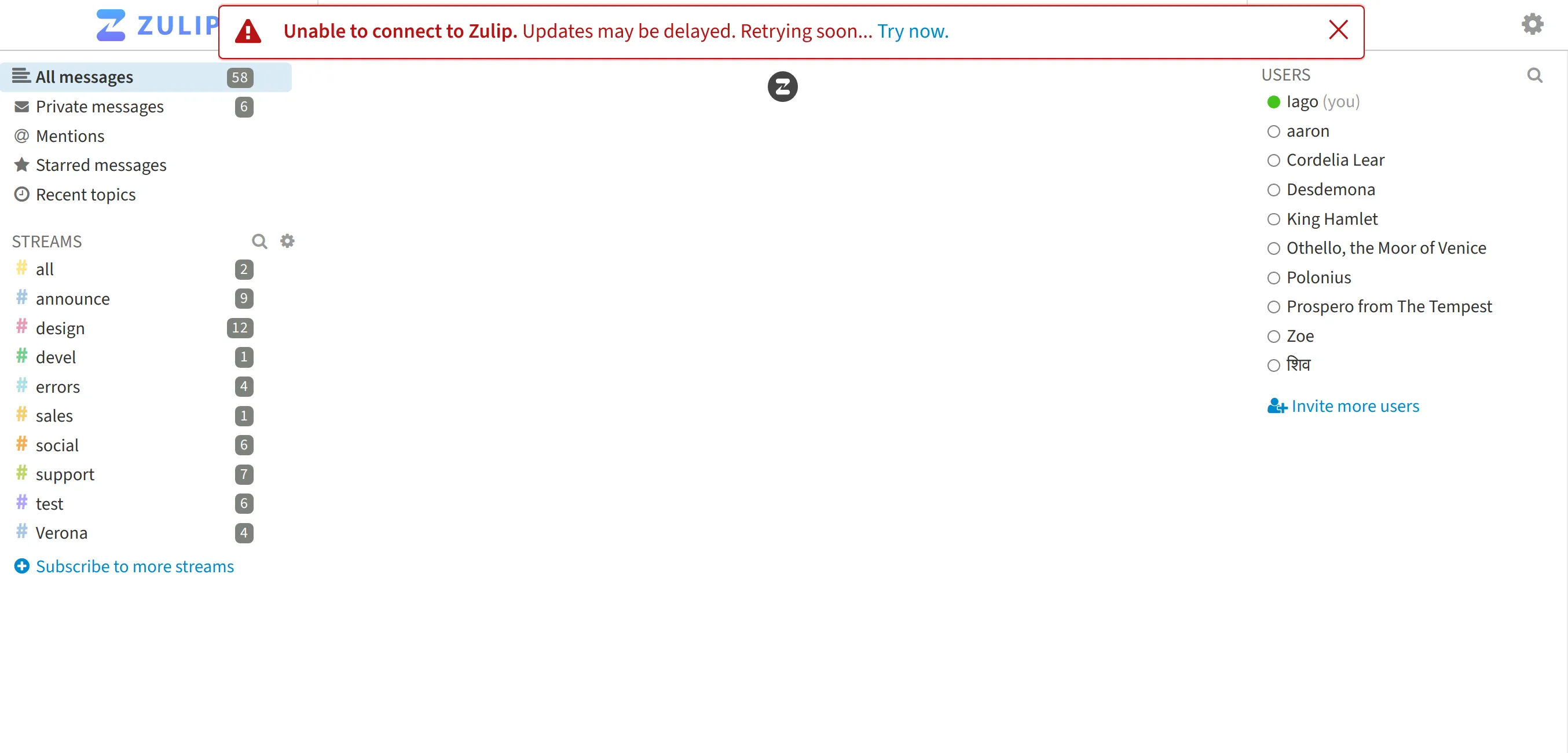

On Thursday, March 18, 2021, Zulip Cloud had a serious security incident. In short, a subtle caching bug resulted in up to 149 users being shown a broken, read-only version of the Zulip UI belonging to one of 26 other users whose data was incorrectly cached.

This malfunctioning interface did not display messages, but did display sensitive metadata, including stream names, names of other users in the organization, unread counts, etc. Users in this state could click around to see additional metadata, like topic names, email addresses, and settings. Attempts to fetch messages or change state, like sending messages, would fail with authentication errors.

I’d like to apologize for this incident on behalf of the entire Zulip team. As a company that cares deeply about security and privacy, we’ve put a lot of thought into preventing accidental data leaks, and so we’re very disappointed by this incident. Thankfully, this incident affected only a small number of users, but we’ll be spending a lot of time internally discussing how to make something like this never happen again.

This incident was caused by a bug, not a hack, and we are not aware of any malicious behavior in relation to this incident. The rest of this blog post explains this incident and its impact in full detail.

Incident summary

Like many production incidents, this incident was caused by an effort to improve the product—in this case, moving Zulip Cloud behind Amazon CloudFront, which will significantly improve Zulip Cloud’s network performance for our international users.

On Thursday, March 18 around 17:51 (US Pacific time), we started sending all Zulip Cloud traffic through CloudFront. Our engineers observed a high volume of unusual errors, with the first error at 17:53 according to our logs. We rolled back the CloudFront change at 18:20, although this took some additional time to take effect for users on some networks. By 19:00, the rollback had stopped additional users from being affected. A small number of affected users continued seeing incorrect behavior, mainly being redirected to another organization’s login page, until this residual effect was fully resolved at 22:49.

Was my account affected?

- Our logs allow us to identify all 26 users whose metadata was served to the wrong user as part of this incident. If you were one of those 26 users, or an administrator of their organization, we have contacted you by email.

- Only users who had Zulip open during the incident (17:51 to 18:46 US Pacific time on March 18) could be affected.

- All affected users were logged out at 21:14 US Pacific time. However, the vast majority of users we logged out were not affected.

If you have any questions about whether your account or organization was affected, please contact support.

User impact details

Our logs allow us to understand the total impact of this bug in detail.

- 149 times, users opening the Zulip web app were served data intended for another user in their region who had opened the web app at about the same time. This manifested in two different ways:

  - Some users were shown the broken read-only Zulip UI for another user, with an infinite loading indicator where messages would be. Attempts to fetch additional data or change state, like sending messages, would fail with authentication errors.

    It would theoretically have been possible for a malicious and technically skilled user who found themselves in this state to use the session cookie to get a normal logged-in Zulip UI and make changes or read message content. (This vulnerability was closed when we logged out users at 21:14.) We’ve studied our logs to investigate whether this theoretical possibility occurred, and we believe it did not.

  - Some users were incorrectly redirected from the login page or logged-out homepage of their Zulip organization to that of another organization (e.g. example.zulipchat.com → somethingelse.zulipchat.com/login or vice versa). These users had the confusing experience of being unable to log in to their Zulip organization.

- 236 times, users interacting with the Zulip web or mobile apps in a way that fetched message history were served data for another user. Despite message history being sent to the wrong clients, our testing suggests that these users would have seen only a generic connection error, because Zulip apps could not display the unexpected responses: they referenced users, streams, etc. not present in the app’s metadata.

- 3175 times, browser clients attempting to fetch real-time updates (“events”) from the server received data intended for another user. These payloads are very small (often functionally empty or containing a single message), and would have been similarly invisible to the user.

What was the bug?

The main job of a CDN like CloudFront is caching (though it also improves performance in other subtle ways). This incident was caused by subtle bugs in our CloudFront configuration causing it to cache data it should not have.

CloudFront caching works as follows. First, when your browser sends a request to Zulip Cloud—say, for the JavaScript code that runs the Zulip web app—instead of sending the request all the way to the Zulip Cloud servers located on the US East Coast, your browser makes the request to a nearby CloudFront server. Amazon operates CloudFront servers in numerous places around the world, so if you’re located anywhere other than the US East Coast, that’s a much shorter trip.

If that CloudFront server doesn’t already have the data your browser is looking for, it will turn around and ask Zulip Cloud for the data and pass it on—but it will also keep a copy, to have it ready the next time someone requests it. Then, the next time someone requests that data, it can send that copy right back—which, depending on where you are, may be much faster than it’d take to get it all the way from Zulip Cloud’s own servers. In much of Asia, this can be as much as a full second faster.
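To make the miss-then-hit flow concrete, here is a toy model in Python. It is a simplified sketch, not how CloudFront is implemented: the class `ToyEdgeCache`, the `origin` function, and the `/static/app.js` path are hypothetical, and the model keys its cache on the URL path alone and never expires anything.

```python
from typing import Callable, Dict, List


class ToyEdgeCache:
    """Hypothetical, deliberately simplified CDN edge node keyed on URL path only."""

    def __init__(self, fetch_from_origin: Callable[[str], str]) -> None:
        self.fetch_from_origin = fetch_from_origin
        self.store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def get(self, path: str) -> str:
        if path in self.store:
            self.hits += 1  # served from the nearby edge, no trip to the origin
            return self.store[path]
        self.misses += 1  # long trip to the origin servers
        body = self.fetch_from_origin(path)
        self.store[path] = body  # keep a copy for the next requester
        return body


origin_calls: List[str] = []


def origin(path: str) -> str:
    # Stands in for the distant origin server; records every request it receives.
    origin_calls.append(path)
    return f"contents of {path}"


edge = ToyEdgeCache(origin)
edge.get("/static/app.js")  # miss: fetched from the origin and stored
edge.get("/static/app.js")  # hit: answered from the stored copy
print(edge.hits, edge.misses, len(origin_calls))  # 1 1 1
```

This is only safe because every requester of `/static/app.js` should get identical bytes; nothing in the model distinguishes one user from another.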

Naturally, this is only a good thing if the data the CloudFront server has to offer you is the right data to answer your request. For data like the JavaScript code of the web app—which is the same for every user, and only changes when we deploy a new version—this works great.

On the other hand, for data like the list of people, list of streams, and other details of your Zulip organization, the data is different from user to user. For those requests, it doesn’t make sense for CloudFront to try to keep a copy, or to send you a copy it saved from a previous request. However, CloudFront can make these requests to Zulip Cloud across a faster network than your browser can, which also makes it useful even for those requests it shouldn’t cache.

In this incident, a small fraction of requests to Zulip Cloud had the responses incorrectly cached by CloudFront, which then served copies of the data to other users. The root cause was a subtle combination of bugs in our CloudFront configuration and quirks in how CloudFront behaves.

The technical details

Zulip servers (whether Zulip Cloud or self-hosted) have long used the standard Cache-Control, Expires, and Vary HTTP headers to tell browsers, proxies, and CDNs like CloudFront not to cache HTTP responses that shouldn’t be cached. However, a combination of CloudFront quirks meant that in the (essentially default) configuration we deployed, CloudFront would, for a small portion of requests, ignore all of those headers and serve cached responses anyway. Failing to configure CloudFront correctly was absolutely our error, but explaining what happened requires explaining CloudFront’s quirks.

Zulip generally uses the following bundle of HTTP headers to prevent incorrect caching:

Cache-Control: max-age=0, no-cache, no-store, must-revalidate, private

Date: [current date]

Expires: [current date]

Vary: Cookie, Accept-Language
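For illustration, here is a minimal Python sketch that produces this header bundle using only the standard library. The function name `never_cache_headers` is hypothetical and this is not Zulip’s code; it simply shows Date and Expires being set to the same timestamp alongside the Cache-Control and Vary values listed above.

```python
import time
from email.utils import formatdate
from typing import Dict, Optional


def never_cache_headers(now: Optional[float] = None) -> Dict[str, str]:
    """Hypothetical helper: build a Zulip-style bundle of anti-caching headers."""
    timestamp = time.time() if now is None else now
    # HTTP-date format, e.g. "Thu, 01 Jan 1970 00:00:00 GMT".
    http_date = formatdate(timestamp, usegmt=True)
    return {
        "Cache-Control": "max-age=0, no-cache, no-store, must-revalidate, private",
        "Date": http_date,
        # Expires equal to Date: already stale, even for caches predating Cache-Control.
        "Expires": http_date,
        # A stored copy may only be reused for identical Cookie and Accept-Language values.
        "Vary": "Cookie, Accept-Language",
    }


for name, value in never_cache_headers(now=0).items():
    print(f"{name}: {value}")
```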

The purpose of the Vary header is to indicate that the response would have been different if the given request headers had been changed, and so a cached response should only be served for a request where those request headers are identical. Since Zulip authenticates users using a session identifier transmitted in a cookie, Vary: Cookie should prevent a user from seeing cached responses intended for a different user.
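A minimal sketch of how a Vary-aware cache could build its cache key, assuming a simple model in which every request header named in Vary becomes part of the key. The function name `cache_key` and the sample cookies are hypothetical; real caches following RFC 9111 also normalize header values before comparing them.

```python
from typing import Dict, Optional, Tuple


def cache_key(url: str, request_headers: Dict[str, str], vary: str) -> Optional[Tuple]:
    """Hypothetical cache key that includes every request header named in Vary."""
    names = sorted({h.strip().lower() for h in vary.split(",") if h.strip()})
    if "*" in names:
        return None  # "Vary: *" means a stored response can never be reused
    # Header field names are case-insensitive, so compare them in lowercase.
    headers = {k.lower(): v for k, v in request_headers.items()}
    # A header absent from the request is recorded as None, which still counts.
    return (url,) + tuple((name, headers.get(name)) for name in names)


vary = "Cookie, Accept-Language"
alice = {"Cookie": "sessionid=alice", "Accept-Language": "en"}
bob = {"Cookie": "sessionid=bob", "Accept-Language": "en"}

print(cache_key("/", alice, vary) == cache_key("/", bob, vary))  # False
```

If the key were built from the URL alone, Alice’s and Bob’s requests would collide, and one of them could be answered with the other’s stored response.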

However, although CloudFront parses and passes along certain parts of the Vary header to the viewer, it does not respect the Vary header when making its own caching decisions (except for the specific wildcard value Vary: *). Instead, it relies on explicit configuration, the default of which does not include the equivalent of Vary: Cookie. As the CloudFront documentation puts it:

By default, CloudFront doesn’t consider cookies when processing requests and responses, or when caching your objects in edge locations. If CloudFront receives two requests that are identical except for what’s in the Cookie header, then, by default, CloudFront treats the requests as identical and returns the same object for both requests.

Now, CloudFront was configured with its “Managed-AllViewer” origin request policy. The choice of origin request policy dictates which headers are sent to the origin, and “Managed-AllViewer” is the only managed policy that passes through any cookies sent between the viewer and the origin, as necessary for a user to authenticate to Zulip at all.

Unfortunately, the origin request policy does not affect CloudFront’s default behavior of treating requests with different cookies as identical for the purposes of caching. A request including an authenticated user’s session cookie may generate a response with data intended for that user, and if that response were to be cached and reused for a request including a different session cookie, this would leak the first user’s data to the second user.
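The distinction between what reaches the origin and what goes into the cache key is easy to miss, so here is a toy model of it. `Edge`, `origin`, the `/home` path, and the cookie values are hypothetical. The model also ignores Cache-Control entirely, so the leaky variant leaks on every repeated request; in the real incident, Zulip’s anti-caching headers narrowed the leak to the traffic-spike case discussed in the next section.

```python
from typing import Dict, Tuple


def origin(path: str, cookie: str) -> str:
    # The (hypothetical) origin authenticates the user from the session cookie it receives.
    user = cookie.split("=", 1)[1]
    return f"stream list for {user}"


class Edge:
    """Toy CDN node: cookies always reach the origin, but may be left out of the cache key."""

    def __init__(self, key_includes_cookie: bool) -> None:
        self.key_includes_cookie = key_includes_cookie
        self.store: Dict[Tuple[str, ...], str] = {}

    def get(self, path: str, cookie: str) -> str:
        # The cache key decides which requests count as "the same".
        key = (path, cookie) if self.key_includes_cookie else (path,)
        if key not in self.store:
            # On a miss the cookie is forwarded, so the origin answers for the right user...
            self.store[key] = origin(path, cookie)
        # ...but on a hit, whoever asks gets the stored copy.
        return self.store[key]


leaky = Edge(key_includes_cookie=False)
leaky.get("/home", "sessionid=alice")
print(leaky.get("/home", "sessionid=bob"))  # stream list for alice

fixed = Edge(key_includes_cookie=True)
fixed.get("/home", "sessionid=alice")
print(fixed.get("/home", "sessionid=bob"))  # stream list for bob
```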

Cache control

Despite Vary being ignored, this leak still wouldn’t be possible if the responses were not cached at all. Indeed, Zulip separately marks its responses as non-cacheable, both to prevent private data from being sent to the wrong user, and also to prevent stale data from being sent even to the right user.

Indicating that a response is not cacheable is the purpose of Zulip’s Cache-Control header:

- max-age=0: the response must be considered stale immediately (0 seconds) after the request.

- no-cache: the response must be revalidated with the origin server before being served again.

- no-store: the response must not be stored in any cache.

- must-revalidate: the response must be revalidated with the origin server if it’s stale.

- private: the response must not be stored in a cache that might be shared between multiple users.
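As a rough illustration of how a shared cache such as a CDN is supposed to read these directives, here is a simplified sketch based on the storage rules in RFC 9111. The function names are hypothetical, and the parser is deliberately naive: it does not handle quoted arguments containing commas (such as `private="a, b"`), and it treats any `private` directive as applying to the whole response.

```python
from typing import Dict, Optional


def parse_cache_control(value: str) -> Dict[str, Optional[str]]:
    """Split a Cache-Control value into {directive: argument or None} (naive parser)."""
    directives: Dict[str, Optional[str]] = {}
    for part in value.split(","):
        name, sep, arg = part.strip().partition("=")
        if name:
            directives[name.strip().lower()] = arg.strip().strip('"') if sep else None
    return directives


def shared_cache_may_store(cache_control: str) -> bool:
    """Simplified rule: a shared cache must not store no-store or private responses."""
    directives = parse_cache_control(cache_control)
    return "no-store" not in directives and "private" not in directives


zulip = "max-age=0, no-cache, no-store, must-revalidate, private"
print(parse_cache_control(zulip)["max-age"])  # 0
print(shared_cache_may_store(zulip))  # False
print(shared_cache_may_store("public, max-age=31536000"))  # True
```

Either `no-store` or `private` alone would be enough to keep a well-behaved shared cache from storing the response; Zulip sends both.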

Additionally, an Expires header equal to the current Date also indicates that the response must be considered immediately stale, for caches that don’t understand the newer Cache-Control header.
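A sketch of how a cache might compute how long a response stays fresh, assuming the precedence RFC 9111 gives a shared cache: s-maxage, then max-age, then Expires minus Date. The function `freshness_lifetime` is hypothetical and the sample dates are arbitrary. With Zulip’s headers, every path through the function yields a lifetime of zero seconds.

```python
from email.utils import parsedate_to_datetime
from typing import Dict, Optional


def freshness_lifetime(headers: Dict[str, str]) -> Optional[float]:
    """Seconds a shared cache may treat a response as fresh (simplified RFC 9111 rules)."""
    directives: Dict[str, str] = {}
    for part in headers.get("Cache-Control", "").split(","):
        name, _, arg = part.strip().partition("=")
        if name:
            directives[name.lower()] = arg
    # A shared cache prefers s-maxage, then max-age...
    for name in ("s-maxage", "max-age"):
        if directives.get(name, "").isdigit():
            return float(directives[name])
    # ...and only then falls back to Expires minus Date.
    if "Expires" in headers and "Date" in headers:
        try:
            expires = parsedate_to_datetime(headers["Expires"])
            date = parsedate_to_datetime(headers["Date"])
            return max(0.0, (expires - date).total_seconds())
        except (TypeError, ValueError):
            return 0.0  # an unparseable date (e.g. "Expires: 0") is treated as already expired
    return None  # no explicit lifetime; a cache may fall back to heuristics


now = "Thu, 18 Mar 2021 00:00:00 GMT"
print(freshness_lifetime({"Date": now, "Expires": now}))  # 0.0
print(freshness_lifetime({"Date": now, "Expires": "Thu, 18 Mar 2021 01:00:00 GMT"}))  # 3600.0
```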

CloudFront usually respects the Cache-Control and Expires headers, but with one critical exception when receiving multiple similar requests at roughly the same time:

CloudFront normally respects a Cache-Control: no-cache header in the response from the origin. For an exception, see Simultaneous Requests for the Same Object (Traffic Spikes).

By design, some of Zulip’s background HTTP requests take a long time before the server sends a response; this dramatically reduces the number of requests needed to poll for new messages and the latency when they arrive. When CloudFront sees a large number of these intentionally-delayed responses at roughly the same time, it interprets this situation as a “traffic spike” and starts serving cached responses despite the Cache-Control headers directing otherwise.
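The following is a simplified model of request collapsing, loosely based on the traffic-spike exception quoted above. It is not CloudFront’s actual algorithm: `CollapsingEdge`, the hypothetical `/events` path, and the 50 ms origin delay are assumptions for illustration. In this model, a request that arrives while another request with the same cache key is still waiting on the origin receives that earlier request’s response, and nothing checks Cache-Control. With cookies left out of the cache key, two users’ concurrent long-poll requests end up sharing one user’s data.

```python
import asyncio
from typing import Dict, List, Tuple


async def origin(path: str, cookie: str) -> str:
    # Stands in for a long-polling endpoint: the server holds the request, then answers.
    await asyncio.sleep(0.05)
    return f"events for {cookie}"


class CollapsingEdge:
    """Toy edge node that collapses concurrent requests sharing a cache key."""

    def __init__(self, key_includes_cookie: bool) -> None:
        self.key_includes_cookie = key_includes_cookie
        self.in_flight: Dict[Tuple[str, ...], asyncio.Future] = {}

    async def get(self, path: str, cookie: str) -> str:
        key = (path, cookie) if self.key_includes_cookie else (path,)
        if key in self.in_flight:
            # A "similar" request is already waiting on the origin: piggyback on it,
            # without ever looking at Cache-Control.
            return await self.in_flight[key]
        future = asyncio.get_running_loop().create_future()
        self.in_flight[key] = future
        try:
            body = await origin(path, cookie)
        except Exception as exc:
            future.set_exception(exc)  # wake any waiters with the same error
            raise
        else:
            future.set_result(body)
            return body
        finally:
            del self.in_flight[key]


async def demo(key_includes_cookie: bool) -> List[str]:
    edge = CollapsingEdge(key_includes_cookie)
    # Two users' long-poll requests arrive at nearly the same moment.
    return list(await asyncio.gather(
        edge.get("/events", "sessionid=alice"),
        edge.get("/events", "sessionid=bob"),
    ))


print(asyncio.run(demo(key_includes_cookie=False)))
# ['events for sessionid=alice', 'events for sessionid=alice']
print(asyncio.run(demo(key_includes_cookie=True)))
# ['events for sessionid=alice', 'events for sessionid=bob']
```

In the leaky configuration of this model, Bob’s request is never forwarded to the origin at all, so no response header sent by the origin could have protected him.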

Result

The combination of these quirks meant that some of the server’s responses were both incorrectly cached and delivered to an incorrect user. Because of the way CloudFront was matching similar requests with each other, these incorrectly-cached responses were only actually served for a handful of high-traffic Zulip HTTP GET endpoints (detailed in the impact section, above), and then only for the small fraction of those requests which CloudFront treated as part of a “traffic spike” in the region.

The nondeterministic and load-dependent nature of this bug fortunately meant that only a small number of users were affected, and that a malicious adversary would not have had an opportunity to target specific users or organizations for attack. But it also made the bug much harder to catch before it impacted users, and harder to debug once it had done so.

Zulip response and timeline

We took the following technical steps to resolve this incident (all times are US Pacific time on Thursday, March 18):

- At 17:51, we made the DNS change to put CloudFront in front of Zulip Cloud.

- At 17:53, the incident affected the first user.

- At 18:20, we undid the transition to CloudFront by reverting the DNS change that enabled it. However, despite a short (60s) TTL, some clients were still connecting to CloudFront hours later due to DNS caching.

- At 18:41, we purged CloudFront’s cache. This did not end the incident, because clients with cached DNS could still connect to the misconfigured CloudFront instance.

- At 18:46, we reconfigured CloudFront to respect Vary: Cookie and Cache-Control headers correctly, so that any users with cached DNS that were still connecting to CloudFront would get correct behavior.

- At 19:00, we analyzed summary data from CloudFront. Comparing that with later data shows that only 3 erroneous cache hits from CloudFront (all of the “/login redirects” variety) happened after 19:00.

- At 21:14, we invalidated all user sessions that had been accessed since before the start of the incident, which logged those users out.

- Also at 21:14, we announced the incident via Twitter.

- At 22:49, we purged CloudFront’s cache for a final time, which fixed the “/login redirect” issue for the final small number of users. These had not been fixed at 18:46 because those redirects are not served with Cache-Control headers, and thus the redirect to the wrong realm was still cached.

We’ve directly contacted those users whose accounts were incorrectly accessed during this incident, as well as the administrators of their realms.

Closing

Once again, we’d like to apologize for this incident. Keeping user data safe is the single most important thing we do, and we consider this category of incident unacceptable. We hope you’ve found this incident report helpful in understanding what went wrong and your organization’s exposure.

We’d like to thank Kate Murphy for responsibly reporting behavior related to this incident to our documented security contact and helping verify the 22:49 fix.